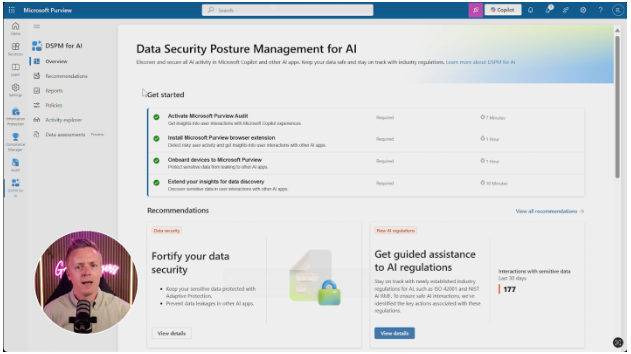

To get started with Purview DSPM, you’ll need a Microsoft 365 E5 or E5 Compliance licence. Once that’s sorted, you’ll have access to a dashboard with several key areas.

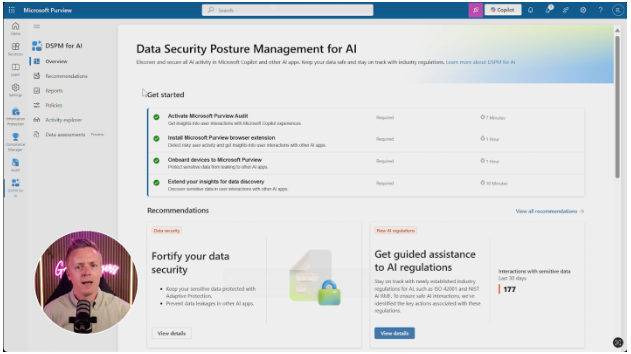

Overview

When you first log into the Microsoft Purview DSPM dashboard, you’ll be greeted with a neat overview that gives you immediate insight into AI usage across your organisation.

Here you’ll find:

- A ‘getting started’ section that guides you through initial setup steps, which might include deploying browser extensions or onboarding devices into Purview

- Some key metrics showing AI usage across your organisation

- Indicators of potential risk areas that need your attention

- Quick links to the most common actions you’ll need to take for strong data governance

From here, you can use the menu on the left to visit any of the following sections.

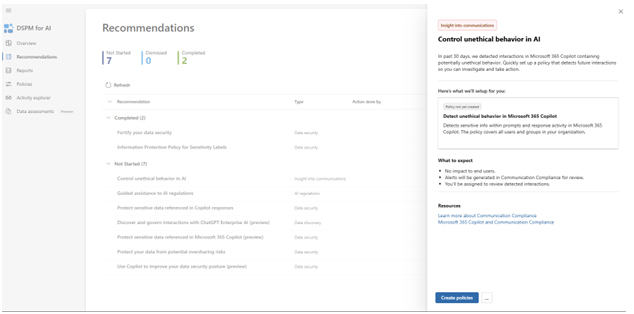

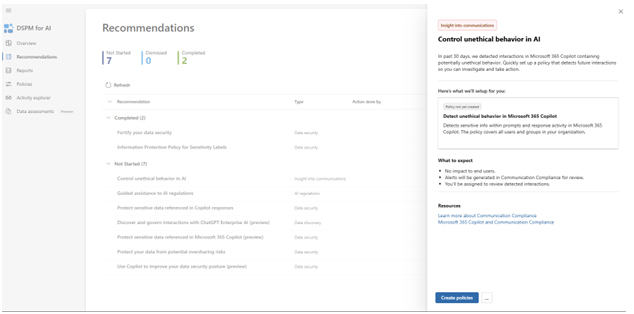

Recommendations

In the Recommendations section, you’ll find insights on the best things you can do right now. They’re automatically generated based on your organisation’s current security posture, and the system tracks which ones you’ve completed and which you’re yet to tackle.

Things you might find here are:

- Suggestions for fortifying data security where vulnerabilities have been detected

- Recommendations to bring in specific policies that prevent users from accessing unapproved AI tools

- Alerts highlighting departments or teams that are showing particularly high-risk behaviour

- Step-by-step guidance for addressing identified security gaps

One example, shown on Microsoft’s Purview blog, is a recommendation to control unethical behaviour in AI. The system can detect someone using Copilot in a way that matches one of its classifiers (“regulatory collusion, stock manipulation, unauthorised disclosure, money laundering, corporate sabotage, sexual, violence, hate” etc.) and notify you, so you can decide how to proceed.

Reports

The Reports section gives you detailed analytics on AI adoption and usage within your business. You’ll find some handy graphs and visualisations on things like which AI tools are being used most frequently (for example, you might see increasing use of Copilot, ChatGPT or other services).

Here you can also see user adoption rates over time, broken down by department, so you can track how quickly AI tools are spreading through your organisation.

You can also get data on unprotected sensitive assets: those that aren’t covered by a data loss prevention (DLP) policy to stop them being exfiltrated, or that don’t have a sensitivity label controlling access to them.
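Note the “or” in that definition: a sensitive asset only needs one of those gaps to count as unprotected. The toy Python model below makes that rule explicit. It’s a minimal sketch under stated assumptions: the `Asset` record and its `dlp_covered` and `sensitivity_label` fields are hypothetical names for illustration, not Purview’s data model or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Asset:
    # Hypothetical record for illustration only; not a Purview schema.
    name: str
    contains_sensitive_info: bool
    dlp_covered: bool                 # True if some DLP policy applies to this asset
    sensitivity_label: Optional[str]  # e.g. "Confidential", or None if unlabelled


def is_unprotected(asset: Asset) -> bool:
    """A sensitive asset counts as unprotected if it lacks DLP coverage
    OR lacks a sensitivity label (either gap on its own is enough)."""
    if not asset.contains_sensitive_info:
        return False
    return (not asset.dlp_covered) or asset.sensitivity_label is None


assets = [
    Asset("payroll.xlsx", True, dlp_covered=True, sensitivity_label="Confidential"),
    Asset("roadmap.docx", True, dlp_covered=False, sensitivity_label="Confidential"),
    Asset("contracts.pdf", True, dlp_covered=True, sensitivity_label=None),
    Asset("menu.txt", False, dlp_covered=False, sensitivity_label=None),
]

unprotected = [a.name for a in assets if is_unprotected(a)]
print(unprotected)  # ['roadmap.docx', 'contracts.pdf']
```

In this model, the file with no sensitive content is ignored entirely, while each of the other two gaps is enough on its own to flag a file.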

Policies

Policies are at the heart of Microsoft Purview’s data protection capabilities. In this section, you can create and apply various policies to restrict users from copying and pasting data into AI tools (or simply alert you when it happens).

Here, you can manage:

- Data loss prevention (DLP) policies that automatically detect when sensitive information (like credit card numbers, passport details, or proprietary code) is being copied into AI tools, and either block the action or warn the user

- Tool usage policies that allow you to specify which AI tools are approved for business use and alert or block when employees attempt to use non-sanctioned services

- Conditional access policies that enable different levels of AI tool access based on user roles, departments, or data sensitivity levels

- Data classification policies that automatically identify and tag sensitive information across your organisation, making it easier to control what can be shared with external AI services

- Notification policies that alert security teams in real-time when high-risk activities are detected, allowing for swift intervention
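Purview ships with built-in sensitive information types, so you won’t write detection logic yourself. Purely to illustrate how a DLP check might recognise something like a credit card number, here is a minimal Python sketch of one common technique: a regular expression finds candidate digit runs, then the Luhn checksum discards numbers that can’t be valid cards. This is a simplified stand-in, not Purview’s actual classifier, and `find_card_numbers` is a hypothetical helper.

```python
import re

# Candidate: 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit, doubling every second digit.
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Hypothetical helper: return candidate card numbers that pass Luhn."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


prompt = "Summarise this: card 4111 1111 1111 1111, ref 1234 5678 9012 3456."
print(find_card_numbers(prompt))  # ['4111111111111111']
```

The checksum step is what keeps false positives down. Both numbers in the prompt look like card numbers, but only the first (a well-known test number) passes Luhn.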

These policies can be customised with different severity levels, from simply logging activities for later review to actively preventing data from leaving your secure environment. A sensible approach is to start with looser, monitoring-only policies to understand usage patterns before implementing stricter controls.
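One way to picture that progression is as an escalating action attached to each policy. The Python sketch below is a hypothetical model, not Purview configuration. The `Action` tiers mirror the options described above (log, warn, block), but the names and the `evaluate` helper are illustrative. The sketch also assumes that when several policies match the same activity, the strictest action wins.

```python
from enum import IntEnum


class Action(IntEnum):
    # Ordered so a higher value means a stricter response (illustrative tiers).
    ALLOW = 0
    AUDIT = 1   # log the activity for later review
    WARN = 2    # show the user a warning but let them proceed
    BLOCK = 3   # stop the data leaving the environment


def evaluate(matched_policies: dict[str, Action]) -> Action:
    """Hypothetical helper: when several policies match one activity,
    apply the strictest action among them (ALLOW if none match)."""
    return max(matched_policies.values(), default=Action.ALLOW)


# Phase 1: monitor only, to learn usage patterns.
pilot = {"credit-card-to-genai": Action.AUDIT}
# Phase 2: tighten once patterns are understood.
enforced = {"credit-card-to-genai": Action.BLOCK, "unsanctioned-ai-site": Action.WARN}

print(evaluate(pilot).name)     # AUDIT
print(evaluate(enforced).name)  # BLOCK
print(evaluate({}).name)        # ALLOW
```

Moving from the pilot phase to enforcement only changes the action attached to each policy. The detection side stays the same, which is what makes a monitor-first rollout low risk.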

Activity explorer

Here you’ll find similar information to the sections above, but drilled down to the specific actions taken by individual users.

You can find:

- Detailed logs showing where users have used AI tools and what kind of information they’ve entered

- Filters that let you drill down by user, department, AI service, or time period

- The ability to see exactly which information was shared with external AI services

- Indicators highlighting when sensitive information has potentially been exposed

- Timeline views that help identify unusual patterns or potential security incidents

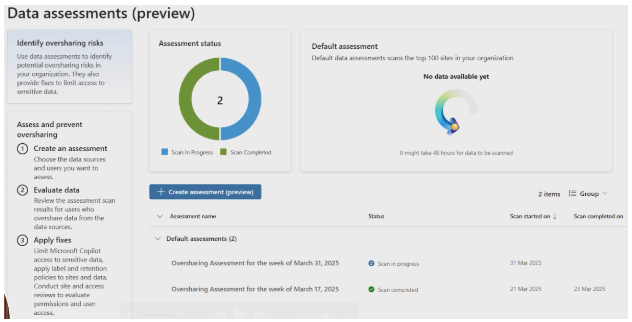

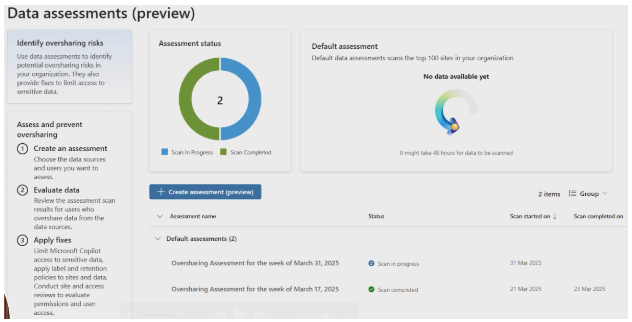

Data assessments

This section is currently in preview, but you can still make use of it today. Data assessments use AI-based algorithms to identify oversharing in your organisation.

This feature automatically scans your SharePoint sites and other document repositories to identify potential security risks. It then conducts thorough risk assessments of documents and applications that might have been inappropriately shared outside your secure environment.

Based on these assessments, you’ll receive tailored recommendations for improving your overall data security posture. Over time, you can track trends to see whether your security measures are becoming more or less effective, so you can keep refining your approach.